Webinar: AI Risk Management and Trustworthiness for Healthcare Organizations

When: September 25, 2025 - 12:00 PM CDT


Palindrome Technologies

Dec 30, 2025 4:18:13 PM


When: October 22, 2025 - 12:00 PM CDT

We are excited to offer this training session in collaboration with the Society of Corporate Compliance and Ethics (SCCE) and the Health Care Compliance Association (HCCA).

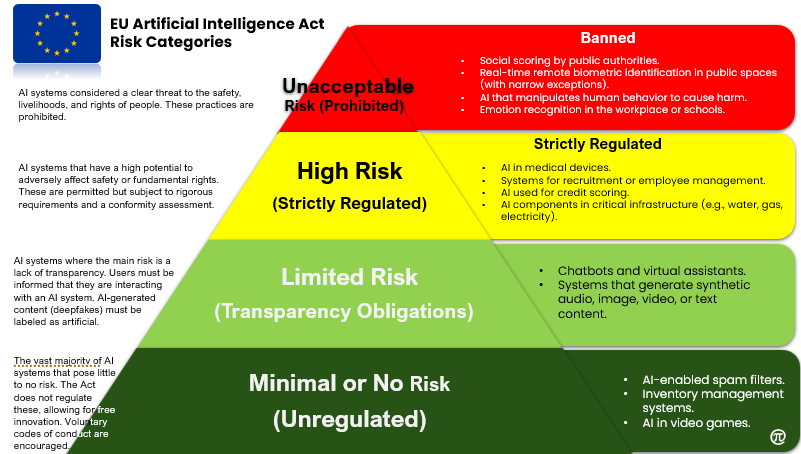

The European Union's AI Act (Regulation (EU) 2024/1689) establishes the world's first comprehensive, horizontal legal framework for artificial intelligence, creating a de facto global standard that fundamentally re-architects the AI lifecycle. This analysis deconstructs the Act from a computer science perspective, translating its legal mandates into concrete engineering and strategic challenges. The regulation is built on a risk-based pyramid and reserves its most stringent obligations for "high-risk" systems, for which it codifies four technical pillars:

Furthermore, the Act introduces a novel, tiered regulatory regime for General-Purpose AI (GPAI) models, using a computational threshold (cumulative training compute greater than 10^25 floating-point operations, or FLOPs) as a primary proxy for identifying models that pose "systemic risk." This transforms the AI supply chain, creating a cascade of liability and due-diligence obligations that runs from foundation model developers to downstream providers. For organizations, the strategic imperative is to view the Act not as a compliance burden but as a framework for building trustworthy, defensible, and market-leading AI. This requires a paradigm shift toward integrated, "compliance-by-design" approaches: unified governance, technical compliance operationalized in MLOps, investment in AI security research, systems re-architected for explainability, and strategic management of the new complexities of the GPAI supply chain.
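To make the compute threshold concrete, the sketch below estimates a model's training compute and compares it with the 10^25 FLOP presumption. It assumes the widely used "6 × parameters × training tokens" rule of thumb for dense transformer training; that heuristic is an approximation from the scaling-law literature, not a method prescribed by the Act, which refers to the cumulative compute actually used. The function names and the model sizes in the example are hypothetical and chosen only for illustration.

```python
# Hypothetical sketch: compare an estimated training compute against the
# AI Act's systemic-risk presumption for GPAI models (more than 10^25 FLOPs).
# ASSUMPTION: compute is approximated with the 6 * N * D heuristic
# (N = parameters, D = training tokens); the Act itself counts the
# cumulative compute actually used for training.

SYSTEMIC_RISK_THRESHOLD_FLOPS = 1e25  # "greater than 10^25" FLOPs


def estimate_training_flops(n_params: float, n_tokens: float) -> float:
    """Approximate training FLOPs as 6 * N * D (forward plus backward pass)."""
    return 6.0 * n_params * n_tokens


def presumed_systemic_risk(n_params: float, n_tokens: float) -> bool:
    """Return True if the estimated compute exceeds the presumption threshold."""
    return estimate_training_flops(n_params, n_tokens) > SYSTEMIC_RISK_THRESHOLD_FLOPS


if __name__ == "__main__":
    # Hypothetical model configurations, for illustration only.
    examples = {
        "7B params, 2T tokens": (7e9, 2e12),
        "400B params, 15T tokens": (400e9, 15e12),
    }
    for name, (n, d) in examples.items():
        flops = estimate_training_flops(n, d)
        flagged = presumed_systemic_risk(n, d)
        print(f"{name}: {flops:.2e} FLOPs, presumed systemic risk: {flagged}")
```

With these inputs, the first configuration comes out at about 8.4 × 10^22 FLOPs (below the threshold) and the second at about 3.6 × 10^25 FLOPs (above it). Because the threshold is a presumption rather than the only criterion, a check like this is at most an early screening signal, not a classification decision.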

Key Takeaways for the Talk

Register HERE to attend the webinar

Also check out our blog post Managing Risk in Artificial Intelligence Systems - A Practitioner's Approach 2025.
